Microsoft in the News

Finally! Microsoft

has added the ability to convert PDF documents into editable Word

documents. Assuming you are using MS

Office 365, you can now open a PDF in Word the same way you would open a Word

doc. You will get a message saying that

it may take a little time to open (converting a lot of graphics takes time) and

that the converted document may not be exactly as shown in the PDF – nothing

new there.

The ability to convert to a Word document will depend on how

the PDF was created. If the PDF is an

image – in other words it was scanned in, then it cannot be converted. On the other hand, if the PDF was created by

saving a Google doc or Word doc as a PDF, then all the required information is

there for conversion.

Life just got a little easier.

ADC – Glossary

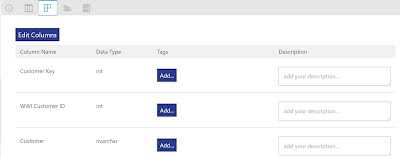

As mentioned in my last post, the glossary allows an

organization to document key business terms and their definitions to create a

custom business vocabulary. This enables

consistency in the data usage across the entire catalog. Once you have set up the terms they can be

used in tagging.

The Glossary can be set up in a hierarchy of items to show

classifications. There is no limit to

the number of levels in a hierarchy, however it is strongly suggested to limit

the levels to fewer than three to keep it easy to understand. It is also acceptable to have no hierarchies

as well by simply leaving the parent term blank. Once you have added a few items you can

review them in the main glossary page.

The left side of the glossary screen shows the terms you

have added

Here we see that a checking account is a child item of the

CIF. I use this example to show a few

things. The Cif is an acronym for

Customer Information File. However, it

is almost never referred to as that in a bank; it is simply called a CIF. This is a good example of how an asset can

then be tagged as a CIF without the entire name being needed. It also standardizes it as CIF, instead of

having the numerous variations that people may come up with such as; cif, C.I.F

etc. This is important when searching

tags. Consistent tags make searches more

consistent.

There is additional information about the terms displayed in

a table. The column sizes of each can be

adjusted to allow you to view as much or as little of each column as you

prefer. Above this table is the filter for the glossary and a Results per

page. As your glossary expands both of

these will become very important in the management of your glossary.

The box to the left of each item can be checked to display

the details of the item, which will be displayed in a panel on the right.

Note at the top of the screen under the main Data Catalog

menu is the glossary menu

New term – allows you to create a new term

Add child – will add a child to an existing term

automatically. This will bring up a new term window with the parent term filled

in with the information of the term you were on when you choose add child.

Add admin – this allows you to add a glossary

administrator. Note that security groups

need to be set up for this to work.

Edit term – will allow you to edit the current term you are

viewing.

Delete – this will delete the current term. Note that if the

term is a parent then all children must be deleted before you can delete the

parent.

Toggle – this button expands and minimizes the detail view

pane.

It is interesting to note that the glossary gives you not

only information about the term but also how it is used and what it is related

to by noting the relationships and assets associated to the term.

Next we will look at how these are used in tags.